Performance testing industry standards

Performance tests should also be used throughout the development phase to evaluate web services, microservices, APIs, and other important components. As the application begins to take form, performance tests should become part of the normal testing routine.

There are several types of performance tests, each designed to measure or assess different aspects of an application. The goal of load testing is to uncover any bottlenecks before the application is released. Similar to load testing, endurance testing evaluates the application's performance under normal workloads over long periods.

As the workload increases, the tests carefully monitor the application's performance for any declines. Volume testing, also known as flood testing, looks at how well an application performs when it is inundated with large amounts of data. The goal of volume testing is to find any performance issues caused by data fluctuations.

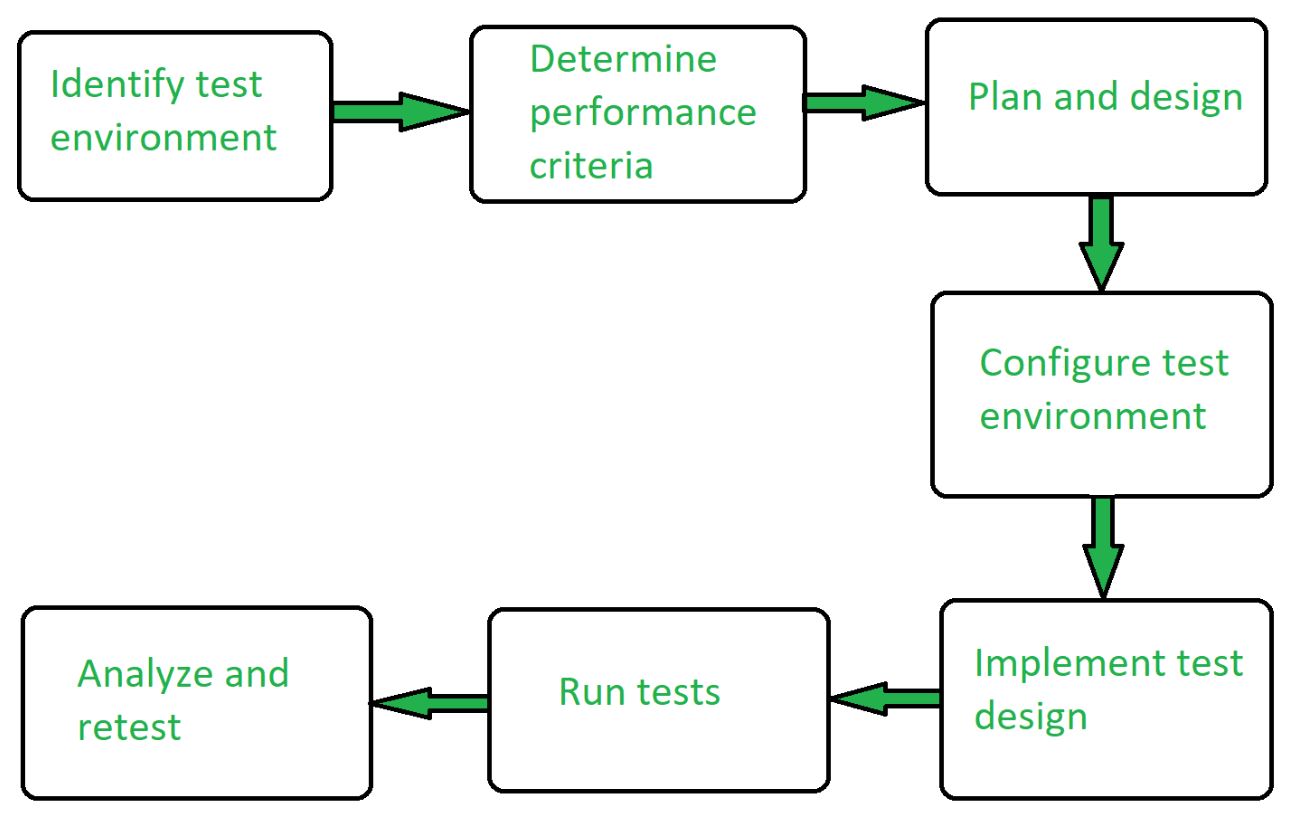

Implementing performance testing will largely depend on the nature of the application as well as the goals and metrics that organizations find to be the most important. Nonetheless, there are some general guidelines or steps that most performance tests follow. First, identify the physical test environment, production environment, and testing tools that are available to your team.

It is also important to record the hardware, software, infrastructure specifications, and network configurations in the test and production environments to ensure consistency. While some tests may take place in the production environment, it is vital to establish stringent protections to prevent testing from disrupting production operations.

Here, the QA team must determine the success criteria for the performance test by identifying both the goals and constraints for metrics like response time, throughput, and resource allocation.
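As a minimal sketch of how success criteria might be written down and checked, the snippet below compares measured values against goals for response time, throughput, and resource use. The `Criteria` fields, the metric names, and the threshold values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Criteria:
    """Hypothetical success criteria for one performance test."""
    max_avg_response_s: float    # upper bound on average response time
    min_throughput_rps: float    # lower bound on requests per second
    max_cpu_utilization: float   # upper bound on CPU use, as a fraction 0..1


def evaluate(measured: dict, criteria: Criteria) -> dict:
    """Return a pass/fail flag per metric for one test run."""
    return {
        "response_time": measured["avg_response_s"] <= criteria.max_avg_response_s,
        "throughput": measured["throughput_rps"] >= criteria.min_throughput_rps,
        "cpu": measured["cpu_utilization"] <= criteria.max_cpu_utilization,
    }


criteria = Criteria(max_avg_response_s=2.0, min_throughput_rps=100.0, max_cpu_utilization=0.8)
run = {"avg_response_s": 1.5, "throughput_rps": 90.0, "cpu_utilization": 0.7}
verdict = evaluate(run, criteria)
print(verdict)
```

Keeping goals and constraints in one structure makes it easier to rerun the same check after each test execution.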

While key criteria will be derived from the project specifications, testers should nonetheless be free to establish a broader collection of tests and performance benchmarks. This is essential when the project specifications lack a wide variety of performance benchmarks.

Additionally, be sure to identify all metrics that need to be measured during the tests. Before the test is executed, prepare the testing environment along with any necessary tools or resources. Analyze and share the results. Depending on your needs, run the test again using the same or different parameters.

In software testing, the ability to produce quality and accurate results largely stems from the quality of the test itself. The same is true for performance testing. As mentioned earlier, incorporate performance testing early and often into the SDLC. Waiting until the end of the project to conduct the first performance tests can make correcting performance issues more costly.

Additionally, it is highly recommended to test individual components or modules, not just the finished application. While there are many performance-testing tools on the market, choosing the right one is critical. To do so, take into account the specific project needs, organizational needs, and technological specifications of the application.


Performance testing is frequently used as part of the process of performance profile tuning. The idea is to identify the "weakest link" — there is inevitably a part of the system which, if it is made to respond faster, will result in the overall system running faster.

It is sometimes a difficult task to identify which part of the system represents this critical path, and some test tools include or can have add-ons that provide instrumentation that runs on the server agents and reports transaction times, database access times, network overhead, and other server monitors, which can be analyzed together with the raw performance statistics.

Without such instrumentation, one might have to have someone crouched over Windows Task Manager at the server to see how much CPU load the performance tests are generating, assuming a Windows system is under test.

Performance testing can be performed across the web, and even done in different parts of the country, since it is known that the response times of the internet itself vary regionally.

It can also be done in-house, although routers would then need to be configured to introduce the lag that would typically occur on public networks. Loads should be introduced to the system from realistic points. It is always helpful to have a statement of the likely peak number of users that might be expected to use the system at peak times.

If there can also be a statement of what constitutes the maximum allowable 95th percentile response time, then an injector configuration could be used to test whether the proposed system met that specification. Testing also requires a stable build of the system, which must resemble the production environment as closely as possible.

To ensure consistent results, the performance testing environment should be isolated from other environments, such as user acceptance testing (UAT) or development. As a best practice, it is always advisable to have a separate performance testing environment resembling the production environment as much as possible.
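To make the 95th percentile check concrete, here is a small sketch that computes a percentile with the nearest-rank method and compares it to a limit. The function names and the sample data are assumptions for illustration; real tools may use a different percentile definition (for example, linear interpolation).

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def meets_spec(samples, max_p95):
    """True if the 95th percentile response time is within the allowed maximum."""
    return percentile(samples, 95) <= max_p95


# Hypothetical response times in milliseconds: 1, 2, ..., 20
response_times_ms = list(range(1, 21))
p95 = percentile(response_times_ms, 95)
print(p95, meets_spec(response_times_ms, max_p95=19))
```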

In performance testing, it is often crucial for the test conditions to be similar to the expected actual use. However, in practice this is hard to arrange and not wholly possible, since production systems are subjected to unpredictable workloads.

Test workloads may mimic occurrences in the production environment as far as possible, but only in the simplest systems can one exactly replicate this workload variability.

Loosely-coupled architectural implementations e. To truly replicate production-like states, enterprise services or assets that share a common infrastructure or platform require coordinated performance testing, with all consumers creating production-like transaction volumes and load on shared infrastructures or platforms.

It is critical to the cost performance of a new system that performance test efforts begin at the inception of the development project and extend through to deployment.

The later a performance defect is detected, the higher the cost of remediation. This is true in the case of functional testing, but even more so with performance testing, due to the end-to-end nature of its scope.

It is crucial for a performance test team to be involved as early as possible, because it is time-consuming to acquire and prepare the testing environment and other key performance requisites.

This can be done using a wide variety of tools. Each of the tools mentioned in the above list (which is neither exhaustive nor complete) employs either a scripting language (C, Java, JS) or some form of visual representation (drag and drop) to create and simulate end-user workflows.

This forms the other face of performance testing. With performance monitoring, the behavior and response characteristics of the application under test are observed. The parameters below are usually monitored during a performance test execution.

As a first step, the patterns generated by these four parameters provide a good indication of where the bottleneck lies. To determine the exact root cause of the issue, software engineers use tools such as profilers to measure what parts of a device or software contribute most to the poor performance, or to establish throughput levels and thresholds for maintaining an acceptable response time.

Performance testing technology employs one or more PCs or Unix servers to act as injectors, each emulating the presence of numbers of users and each running an automated sequence of interactions recorded as a script, or as a series of scripts to emulate different types of user interaction with the host whose performance is being tested.
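The injector idea can be sketched in a few lines: each virtual user runs a scripted interaction repeatedly while the elapsed time of every call is recorded. This is an illustration only, not how any particular tool works; `fake_request` is a hypothetical stand-in for a real scripted interaction, and threads stand in for separate injector machines.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request():
    """Hypothetical scripted interaction; replace with a real call to the system under test."""
    time.sleep(0.001)


def virtual_user(script, iterations):
    """Run the script repeatedly and record the elapsed time of each call in seconds."""
    timings = []
    for _ in range(iterations):
        start = time.perf_counter()
        script()
        timings.append(time.perf_counter() - start)
    return timings


def run_injector(script, users, iterations):
    """Emulate `users` concurrent virtual users and collect all their timings."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(virtual_user, script, iterations) for _ in range(users)]
        return [t for f in futures for t in f.result()]


timings = run_injector(fake_request, users=4, iterations=5)
print(len(timings), max(timings))
```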

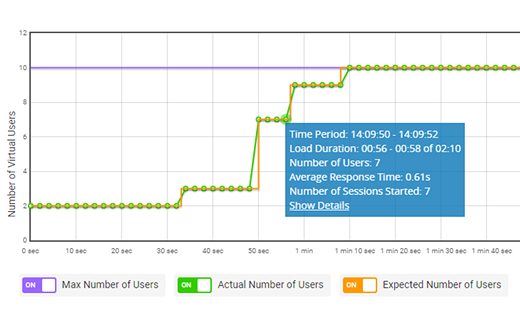

Usually, a separate PC acts as a test conductor, coordinating and gathering metrics from each of the injectors and collating performance data for reporting purposes. The usual sequence is to ramp up the load: to start with a few virtual users and increase the number over time to a predetermined maximum.
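The ramp-up sequence described above can be expressed as a simple schedule of (start time, virtual users) steps. The function name, step size, and step duration below are assumptions chosen for illustration.

```python
def ramp_schedule(start_users, max_users, step, step_duration_s):
    """Return (start_time_s, users) pairs for a stepped ramp-up to max_users."""
    if step <= 0:
        raise ValueError("step must be positive")
    schedule = []
    users = start_users
    t = 0
    while users < max_users:
        schedule.append((t, users))
        users += step
        t += step_duration_s
    schedule.append((t, max_users))  # final step is capped at the predetermined maximum
    return schedule


schedule = ramp_schedule(start_users=5, max_users=20, step=5, step_duration_s=60)
print(schedule)
```

A test conductor could walk this schedule and start the extra virtual users at each step while the injectors keep reporting timings.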

The test result shows how the performance varies with the load, given as number of users vs. response time. Various tools are available to perform such tests. Tools in this category usually execute a suite of tests which emulate real users against the system.

Sometimes the results can reveal oddities. Performance testing can be combined with stress testing in order to see what happens when an acceptable load is exceeded. Does the system crash? How long does it take to recover if a large load is reduced? Does its failure cause collateral damage?

Analytical Performance Modeling is a method to model the behavior of a system in a spreadsheet. The weighted transaction resource demands are added up to obtain the hourly resource demands and divided by the hourly resource capacity to obtain the resource loads.
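The spreadsheet calculation described above can be written out as a short worked example. The transaction mix, the per-transaction CPU demand, and the two-core capacity are hypothetical numbers; only the arithmetic (sum of weighted demands divided by hourly capacity) comes from the text.

```python
def resource_load(transactions, capacity_per_hour):
    """Hourly demand (sum of volume x demand per transaction) divided by hourly capacity.

    transactions: list of (hourly_volume, demand_per_transaction) pairs,
    with demand in the same unit as capacity_per_hour.
    """
    hourly_demand = sum(volume * demand for volume, demand in transactions)
    return hourly_demand / capacity_per_hour


# Hypothetical mix: 9000 searches at 200 CPU-ms each, 3600 checkouts at 500 CPU-ms each
transactions = [(9000, 200), (3600, 500)]
# Hypothetical capacity: 2 cores x 3,600,000 CPU-ms per hour
cpu_capacity_ms = 2 * 3_600_000
load = resource_load(transactions, cpu_capacity_ms)
print(load)
```

In this made-up case the CPU would be about half loaded; repeating the calculation per resource (CPU, disk, network) gives one load figure for each.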

Analytical performance modeling allows evaluation of design options and system sizing based on actual or anticipated business use. It is therefore much faster and cheaper than performance testing, though it requires thorough understanding of the hardware platforms.

According to the Microsoft Developer Network, the Performance Testing Methodology consists of the following activities:

Performance testing is a form of software testing that focuses on how a system performs under a particular load. This is not about finding software bugs or defects. Different performance testing types measure according to benchmarks and standards. Performance testing gives developers the diagnostic information they need to eliminate bottlenecks. There are different types of performance tests that can be applied during software testing.

With rapidly changing customer expectations across the business landscape, the need for high-performing apps is rising in the market.

Today, every business needs apps that perform seamlessly to attract new users and retain existing users. Typically, businesses need apps that perform flawlessly under various conditions such as fluctuating networks, low bandwidths, heavy user loads, etc.

For example, if a mobile application's performance does not meet user expectations, users are likely to abandon that app and move to the nearest competitor.

Invariably, for any application or website, web app performance plays a critical role.

Therefore, businesses should ensure that their apps perform seamlessly under all conditions to deliver a seamless user experience. This testing method checks whether the software performs seamlessly under varying networks, bandwidths, and user loads.

Some of the app issues identified with this type of testing are runtime errors and optimization issues related to speed, latency, throughput, response times, load balancing, etc.

Latest research analysts' views on the need for high-performing apps

According to Statista, there are almost 3. The report also states that only the high-performing apps stand out in the market and attract customers. As a result, it led to a 40 percent decrease in wait time, a 15 percent increase in SEO traffic, and a 15 percent increase in conversion rate to new signups.

Let us further understand the need for application performance across some major industries.

Improves response time: Response time is the total time taken by the software to generate an output against the input received.

Enhances the load time: It is known that slow-loading websites annoy customers, and the same is true of mobile apps with long load times: users either abandon the app or move to the nearest competitor. This testing method improves the load time of applications by identifying and removing performance and load-related issues and bugs in the software.

Improves scalability: The scalability of an app refers to its capacity to handle varying user loads seamlessly. This test helps in enhancing the load-handling capacity of apps by testing the apps and websites under varying user loads.

Eliminates bottlenecks: Bottlenecks are the barriers that decrease the responsiveness of the entire system. This performance testing method helps to remove performance bottlenecks in the software by identifying the areas that cause the problems, and helps to fix them by notifying the dev team.

Helps in early identification and fixing of defects: Performance testing allows testers to detect and fix performance-related issues faster and more frequently. It enables testers to quickly identify issues and results in faster resolution of bugs.

Speeds up the testing process: In earlier software development models like the waterfall model, the testers had to wait for the development process to finish before starting the software testing. But in an agile environment, performance testing is integrated along with the development process, which speeds up the overall software testing process. Typically, a shift-left method of testing is followed in parallel to development, which ensures faster results.

Enhances customer experience (CX): Performance testing ensures delivery of high-quality products to customers in less time.

Faster delivery of quality products enhances CX and makes the stakeholders and customers happy.

The major forces driving the growth of mHealth applications in the healthcare sector are better treatment outcomes and improved patient lifestyles enabled by these apps.

These business-critical apps should perform as expected under all networks and user loads to deliver a great shopping experience to customers.

High-performing banking apps help businesses to deliver a seamless online banking experience to customers. EdTech apps help teachers, students, and others to connect online.

Across industries, businesses need scalable, robust, and high-performing apps to deliver a great CX. Hence, businesses should leverage different performance testing types to get seamless apps.

Load testing: This testing method evaluates the potential of an application to work under varying user loads. It is conducted to remove any performance bottlenecks from the system.

Stress testing: It identifies the breaking point of the software when it is subjected to a user load beyond the expected peak. It also ensures software does not crash under varying conditions. This testing method helps in improving the performance, stability, and reliability of the app.

Spike testing: It is a subset of stress testing and checks the performance of the software by suddenly varying the number of users. This testing method also checks if the software can handle fluctuating user loads under varying conditions.

Volume testing: In this testing, the data load of the software is increased, and its performance is then checked. It helps in determining the volume of data that can be handled by a particular piece of software.

Scalability testing: This test identifies the actual number of users that the system can support with existing hardware capacity.

Baseline testing: Each time the software application is updated, performance testing is performed, and baseline metrics of the software application are recorded. These baseline metrics are compared with the results of previously recorded performance metrics. This testing method helps businesses to ensure that a consistent quality of the software is maintained.

Benchmark testing: This testing method compares performance testing results against performance metrics based on different industry standards.
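The baseline comparison can be sketched as a small function that flags metrics which got worse by more than a tolerance. The metric names, the 10 percent tolerance, and the assumption that lower values are better (as with response times) are illustrative choices, not part of any standard.

```python
def regressions(baseline, current, tolerance=0.10):
    """Return metrics whose current value exceeds the baseline by more than `tolerance`.

    Assumes lower is better for every metric (for example response times).
    The returned values are relative changes, such as 0.25 for a 25% increase.
    """
    flagged = {}
    for name, base in baseline.items():
        if name not in current or base == 0:
            continue  # nothing to compare against
        change = (current[name] - base) / base
        if change > tolerance:
            flagged[name] = change
    return flagged


# Hypothetical recorded metrics from the previous and the current build
baseline = {"p95_ms": 200, "avg_ms": 100}
current = {"p95_ms": 250, "avg_ms": 105}
flagged = regressions(baseline, current)
print(flagged)
```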

With the growing business competition and intricate application architecture, the need for high-quality and optimally performing apps is rising in the market. Though millions of apps are available, only the high-performing apps attract the customer and survive the competition.

Therefore, businesses should leverage performance testing to ensure their critical apps are of high quality and perform seamlessly to deliver a great CX. TestingXperts Tx helps businesses predict application behaviour and benchmark application performance.

Tx ensures the application is responsive, reliable, robust, scalable, and also meets all contractual obligations and SLAs for performance.

We have rich experience in all industry-leading performance testing and monitoring tools, along with expertise in end-to-end application performance testing, including network, database, hardware, etc.

We publish a detailed performance testing report for the application with response times, break-point, peak load, memory leaks, resource utilization, uptime, etc.

Published: 21 Jul. Role of Performance Testing for Businesses Across Industries. Last Updated: 16 Aug.

Performance testing is a non-functional software test used to evaluate how well an application performs. Whether it is a retail website or a business-oriented SaaS solution, performance testing plays an indispensable role in enabling organizations to develop high-quality digital services that provide the reliable and smooth service required for a positive user experience. Ideally, performance testing should be run early and often during the software development life cycle (SDLC).

The steps of a typical performance test are: identify the test environment and tools; identify performance metrics; configure the test environment; implement the test design (using your test design, create the performance tests); execute the tests and monitor the results; and analyze, report, and retest.

Server response time refers to the time taken for one system node to respond to the request of another. A simple example would be an HTTP 'GET' request from a browser client to a web server. In terms of response time, this is what all load testing tools actually measure. It may be relevant to set server response time goals between all nodes of the system.

Load-testing tools have difficulty measuring render-response time, since they generally have no concept of what happens within a node apart from recognizing a period of time where there is no activity 'on the wire'. To measure render response time, it is generally necessary to include functional test scripts as part of the performance test scenario. Many load testing tools do not offer this feature.

It is critical to detail performance specifications (requirements) and document them in any performance test plan. Ideally, this is done during the requirements development phase of any system development project, prior to any design effort. See Performance Engineering for more details. However, performance testing is frequently not performed against a specification.
As a first step, the patterns generated by these 4 parameters provide a good indication on where the bottleneck lies. To determine the exact root cause of the issue, software engineers use tools such as profilers to measure what parts of a device or software contribute most to the poor performance, or to establish throughput levels and thresholds for maintained acceptable response time. This involves filtering logs based on criteria like environment, log levels, and application source. Upon filtering, these logs must be grouped into helpful views with specific fields and time constraints. Given the complexity of the task, it can be time-consuming and mistakes in steps or incorrectly applied filters can waste valuable time. Additionally, if the APM workspace is shared, understanding certain business context becomes necessary. Having a Subject Matter Expert SME who is proficient in using the APM tool is an asset. The SME can help with tasks such as debugging issues and saving time on tasks like log level filtering. Their expertise is also invaluable when creating APM-specific dashboards. Throughout our performance testing phase, we found ourselves resorting to using the Development environment due to constraints in resources that prohibited the provisioning of a dedicated performance environment. This decision brought with it several complications, including confusion over the versions being tested and inaccuracies due to simultaneous development work on other features. Reviewing the logs revealed more issues, with misleading or irrelevant error messages not related to performance testing muddying the results. These challenges underline the necessity of a dedicated performance environment for accurate and efficient testing. This control is vital because experimenting with different configurations is key to the performance testing process. Depending on the size of the dedicated performance environment, some organizations may find it cost-prohibitive. 
In these cases, organizations can adopt the automation of standing up and tearing down test environments as needed, reducing resource expenditure during idle times. Alternatively, larger infrastructures might employ canary testing in production, allowing for a portion of users to trial new features, effectively mimicking a performance testing environment without requiring a separate setup. Developers should be capable of executing performance tests locally with smaller loads. It informs the team about specific metrics and logs to monitor, which can expedite the documentation process. It helps to determine the level of automation needed for setting up and tearing down resources, assisting with the creation of initial scripts. This proactive approach leads to a more robust system and reduces the number of performance issues discovered once the system moves into the official performance testing environment. Make sure the application generates performance metrics for both ingress and egress. Logging the number of processed, errored, and dropped records at set intervals enables the construction of a time-filtered dashboard, aiding in load pattern analysis. Also, capturing timestamps from messages lets us monitor message processing delays — allowing us to identify lag in our dashboard. Ensure the developer distinctly decides which logs are Debug and which are Informational logs for ease of analysis. Swimming through spammed logs is neither fun, nor efficient. Make sure errors are logged properly and are not discarded or hidden within complex exception stacks, as they can be difficult to locate. Strive to automate the setup and teardown of resources for a performance run as much as possible. Manual execution can lead to misconfigurations and wasted time resolving subsequent issues. Maintain end-to-end visibility for logging and metrics, whether concerning the gateway or database. 
This could involve consulting Subject Matter Experts (SMEs) such as a Database Administrator (DBA) to review backend metrics, or a DevOps engineer to assist with gateway-related metrics such as request counts and throttling errors. |
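The advice above about logging processed, errored, and dropped record counts at set intervals, and about deriving lag from message timestamps, can be sketched roughly as follows. This is a minimal illustration, not the team's actual implementation: the class name `IntervalMetrics`, the `perf_metrics` log prefix, and the field names are all hypothetical, and the caller is assumed to invoke `flush` on its own timer.

```python
import logging
from dataclasses import dataclass

OUTCOMES = ("processed", "errored", "dropped")


@dataclass
class IntervalMetrics:
    """Hypothetical per-interval counters plus the worst observed message lag."""
    processed: int = 0
    errored: int = 0
    dropped: int = 0
    max_lag_s: float = 0.0

    def record(self, outcome: str, message_ts: float, now_ts: float) -> None:
        """Count one record; both timestamps are seconds on the same clock."""
        if outcome not in OUTCOMES:
            raise ValueError(f"unknown outcome: {outcome}")
        setattr(self, outcome, getattr(self, outcome) + 1)
        # Lag is how long the message waited before this stage handled it.
        self.max_lag_s = max(self.max_lag_s, now_ts - message_ts)

    def flush(self, logger: logging.Logger) -> dict:
        """Log one Informational line for the interval, then reset the counters."""
        snapshot = {
            "processed": self.processed,
            "errored": self.errored,
            "dropped": self.dropped,
            "max_lag_s": self.max_lag_s,
        }
        # key=value pairs are easy to filter and chart in most log tools.
        logger.info("perf_metrics %s",
                    " ".join(f"{k}={v}" for k, v in snapshot.items()))
        self.processed = self.errored = self.dropped = 0
        self.max_lag_s = 0.0
        return snapshot
```

Emitting one summary line per interval, rather than one line per record, keeps the Informational log readable while still supporting a time-filtered dashboard.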

| Performance Testing Defined & Best Practices Explained | IR | An overview of performance testing metrics: Performance testing metrics are the measures or parameters gathered during the performance and load testing processes. Memory utilization: the utilization of the computer's primary memory while processing work requests. Response time: the total time between sending a request and receiving the response. Average load time: the time a webpage takes to finish loading and appear on the user's screen. Throughput: the number of transactions an application can handle per second, or in other words, the rate at which a network or computer receives requests. Bandwidth: the volume of data transferred per second. Requests per second: the number of requests handled by the application per second. Error rate: the percentage of requests resulting in errors compared to the total number of requests. Performance testing metrics categories: 1. Client-side performance testing metrics: During performance testing, QA teams evaluate the client-side performance of the software. Payload: the essential information in a chunk of data, as distinct from the information used to support it. Most commonly used client-side performance testing tools: PageSpeed Insights: Google PageSpeed Insights is a free tool that helps you find and fix issues that slow your web application's performance.
This tool analyzes the content of a web page and provides page speed scores for mobile and desktop web pages. Lighthouse: Google Lighthouse is an open-source, automated tool for improving the quality of web pages. It can be run against any web page, whether public or requiring authentication. GTmetrix: a website performance testing and monitoring tool. YSlow: an open-source performance testing tool that analyzes websites and gives suggestions to improve their performance. 2. Server-side performance testing metrics: The performance of the server directly affects the performance of an application. Some of the key server performance monitoring metrics are: Requests per second (RPS): the number of requests an information retrieval system such as a search engine handles in one second. Uptime: the proportion of time the server is available and operational. Error rate: the percentage of requests resulting in errors compared to the total number of requests. Thread count: the number of concurrent requests the server receives at a particular time. Throughput: the number of requests an application can handle in a second. Bandwidth: the maximum data capacity that can be transferred over a network in one second. Most commonly used server-side performance monitoring tools: New Relic: a Software as a Service (SaaS) offering that focuses on performance and availability monitoring. It uses a standardized Apdex (application performance index) score to set and rate application performance across the environment in a unified manner. AppDynamics: an application performance management solution that provides the metrics of server monitoring tools along with the troubleshooting capabilities of APM software. Datadog: a performance monitoring and analytics tool that helps IT and DevOps teams determine performance metrics.
SolarWinds NPM and DPA: SolarWinds Network Performance Monitor (NPM) is an affordable and easy-to-use tool that delivers real-time views and dashboards. It also helps to track and monitor network performance visually at a glance. SolarWinds Database Performance Analyzer (DPA) is an automation tool used to monitor, diagnose, and resolve performance problems for various types of database instances, both self-managed and in the cloud. Dynatrace: a performance monitoring tool used to monitor the entire infrastructure, including hosts, processes, and networks. It enables log monitoring and can also show information such as total network traffic, CPU usage, and response time. Some important performance automation testing tools: JMeter: an open-source performance and load testing tool used to measure the performance of applications and software. LoadView: an easy-to-use performance testing tool that provides insights into vital performance testing metrics. LoadNinja: a cloud-based load testing and performance testing platform. Tx-PEARS: an in-house developed framework that helps with non-functional testing requirements, including continuous monitoring of infrastructure in production and in lower environments.
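The Apdex score mentioned in the tools overview can be illustrated with a short calculation. The sketch below follows the commonly published Apdex definition: samples at or below a target threshold T count as satisfied, samples up to 4T count as tolerating at half weight, and the rest count as frustrated. The function name `apdex` and the example threshold are assumptions for illustration, and this is not a claim about how any particular monitoring product computes its score.

```python
def apdex(response_times_s, threshold_s):
    """Return an Apdex-style score in [0, 1] for a list of response times.

    threshold_s is the target response time T chosen by the team (an assumption
    here); 4 * T is the conventional upper bound for "tolerating" samples.
    """
    if not response_times_s:
        raise ValueError("at least one sample is required")
    if threshold_s <= 0:
        raise ValueError("threshold_s must be positive")
    satisfied = sum(1 for t in response_times_s if t <= threshold_s)
    tolerating = sum(
        1 for t in response_times_s if threshold_s < t <= 4 * threshold_s
    )
    # Frustrated samples (slower than 4T) contribute nothing to the score.
    return (satisfied + tolerating / 2) / len(response_times_s)


# Example with a hypothetical 0.5 s target: three satisfied, one tolerating,
# one frustrated sample.
score = apdex([0.1, 0.3, 0.5, 1.0, 2.5], threshold_s=0.5)
```

A single score like this is convenient for comparing runs, but it hides the shape of the distribution, so it is usually read alongside percentiles and error rate.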
Common application performance issues faced by enterprises: Numerous potential issues can affect an application's performance and harm the overall user experience. Here are some common issues: Slow response time: This is the most common performance issue. If an application takes too long to respond, it can frustrate users and lead to decreased usage or even user attrition. High memory utilization: Applications that aren't optimized for efficient memory use can consume excessive system resources, leading to slow performance and potentially causing system instability. Poorly optimized databases: Inefficient queries, lack of indexing, or a poorly structured database can significantly slow down an application. Inefficient code: Poorly written code can cause numerous performance issues, such as memory leaks and slow processing times. Network issues: If the server's network is slow or unstable, it can lead to poor performance for users. Concurrency issues: Performance can severely degrade during peak usage if an application can't handle multiple simultaneous users or operations. Lack of scalability: If an application hasn't been designed with scalability in mind, it may not be able to handle the increased load as the user base grows, leading to significant performance problems. Unoptimized UI: A heavy or unoptimized UI can lead to slow rendering times, negatively affecting the user experience. Server overload: If the server is unable to handle the load, the application's performance will degrade. This can happen if server capacity is inadequate or the application is not designed to distribute load effectively.
Significance of performance testing: Performance testing is critical in ensuring an application is ready for real-world deployment. This performance testing guide addresses a few reasons why performance testing is important: Ensure smooth user experience: A slow or unresponsive application can frustrate users and lead to decreased usage or abandonment. Performance testing helps identify and rectify any issues that could negatively impact the user experience. Validate system reliability: Performance testing helps ensure that the system can handle the expected user load without crashing or slowing down. This is especially important for business-critical applications where downtime or slow performance can have a significant financial impact. Optimize system resources: Through performance testing, teams can identify and fix inefficient code or processes that consume excessive system resources. This not only improves the application's performance but can also save costs by optimizing resource usage. Identify bottlenecks: Performance testing can help identify the bottlenecks that slow down an application, such as inefficient database queries, slow network connections, or memory leaks. Prevent revenue loss: Poor performance can directly impact revenue for businesses that rely heavily on their applications. If an e-commerce site loads slowly or crashes during a peak shopping period, it can result in lost sales. Increase SEO ranking: Website speed is a factor in search engine rankings. Websites that load quickly often rank higher in search engine results, leading to greater traffic and potential revenue. Prevent future performance issues: Performance testing allows issues to be caught and fixed before the application goes live. This not only prevents potential user frustration but also saves the time and money otherwise spent troubleshooting and fixing issues after release.
Challenges of performance testing: Performance testing is critical throughout the SDLC, yet it has its challenges. This performance testing guide highlights the primary complexities organizations face while executing performance tests: Identifying the right performance metrics: Performance testing is not just about measuring the speed of an application; it also involves other metrics such as throughput, response time, load time, and scalability. Identifying the most relevant metrics for a specific application can be challenging. Simulating real-world scenarios: Creating a test environment that accurately simulates real-world conditions, such as varying network speeds, different user loads, or diverse device and browser types, is complex and requires careful planning and resources. Deciphering test results: Interpreting the results of performance tests can be tricky, especially when dealing with large amounts of data or complex application structures. It requires specialized knowledge and experience to understand the results and take suitable actions based on them. Resource intensive: Performance testing can be time-consuming and resource-intensive, especially when testing large applications or systems. This can often lead to delays in the development cycle. Establishing a baseline for performance: Determining an acceptable level of performance can be subjective and depends on several factors, such as user expectations, industry standards, and business objectives. This makes establishing a baseline for performance a challenging task. Continuously changing technology: The frequent release of new technologies, tools, and practices makes it challenging to keep performance testing processes up to date and relevant. Involvement of multiple stakeholders: Performance testing often involves multiple stakeholders, including developers, testers, system administrators, and business teams.
Coordinating between these groups and managing their expectations can be difficult. What are the types of performance tests? Load testing: Load testing is a type of performance testing that checks a system's ability to handle a large number of simultaneous users or transactions. It measures the system's performance under heavy loads and helps identify the maximum operating capacity of the system and any bottlenecks in its performance. Stress testing: This type of testing determines the stability of a system by pushing it beyond its normal working conditions. It helps to identify the system's breaking point and determine how it responds when pushed to its limits. Volume testing: Volume testing evaluates the system's performance under a large volume of data. It helps to identify any bottlenecks that appear when handling large amounts of data. Endurance testing: Endurance testing measures the system's performance over an extended period of time. It helps to identify any performance issues that may arise over time and to ensure that the system can handle prolonged usage. Spike testing: Spike testing measures the system's performance when subjected to sudden and unpredictable spikes in usage. It helps to identify any performance issues that arise when usage patterns change abruptly. Performance testing strategy: Performance testing is an important part of any software development process. What does an effective performance testing strategy look like? An effective performance testing strategy includes the following components: Goal definition: Testing and QA teams need to clearly define what they aim to achieve with performance testing.
This might include identifying bottlenecks, assessing system behavior under peak load, measuring response times, or validating system stability. Identification of key performance indicators (KPIs): Enterprises need to identify the specific metrics they'll use to gauge system performance. These may include response time, throughput, CPU utilization, memory usage, and error rates. Load profile determination: It is critical to understand and document the typical usage patterns of your system. This includes peak hours, number of concurrent users, transaction frequencies, data volumes, and user geography. Test environment setup: Teams need to create a test environment that mirrors their production environment as closely as possible. This includes hardware, software, network configurations, databases, and even the data itself. Test data preparation: Generating or acquiring representative data is vital for effective performance testing. Consider all relevant variations in the data that could impact performance. Test scenario development: Define the actions that virtual users will take during testing. This might involve logging in, navigating the system, executing transactions, or running background tasks. Performance test execution: After developing the test scenarios, teams choose and use appropriate tools, such as load generators and performance monitors, to run them. Results analysis: Analyzing the results of each test and identifying bottlenecks and performance issues is what makes the testing effort pay off. This can involve evaluating how the system behaves under different loads and identifying the points at which performance degrades. Tuning and optimization: Based on the analysis, QA and testing teams make the necessary adjustments to the system, such as modifying configurations, adding resources, or rewriting inefficient code.
Repeat testing: After making changes, it is necessary to repeat the tests to verify that the changes had the desired effect. Reporting: Finally, a detailed report of the findings, including any identified issues and the steps taken to resolve them, summarizes the testing effort. This report should be understandable to both technical and non-technical stakeholders. What are the critical KPIs (Key Performance Indicators) gauged in performance tests? Response time: This measures the amount of time it takes for an application to respond to a user's request, typically in milliseconds. It is used to determine whether the system is responding promptly or whether there are potential bottlenecks. Throughput: This measures the amount of data processed by the system in a given period of time. It helps you identify potential performance issues due to data overload and make informed decisions about your data collection and processing strategies. Error rate: This is the percentage of requests resulting in an error. It is one of the most important metrics for monitoring performance and reliability, and it helps identify the issues that cause errors and slowdowns. Load time: This is the amount of time it takes for a page or application to load. It is an important metric to monitor because slow load times can indicate underlying issues with your website or application. Memory usage: This measures the amount of memory that the system is using.
It is used to identify memory-related issues that may be degrading performance. Network usage: This measures the amount of data being transferred over the network. It is used to identify issues that may be causing slow network performance, such as a lack of bandwidth or a congested network. CPU usage: The CPU usage graph is a key indicator of the health of your application. Rising CPU usage can indicate a problem that is impacting performance, so investigate and address its cause. Latency: This measures the delay between the user's action and the application's response to it. High latency can lead to a sluggish and frustrating user experience. Request rate: This refers to the number of requests your application can handle per unit of time. This KPI is especially crucial for applications expecting high traffic. Session duration: This conveys the average length of a user session. Longer sessions can imply more engaged users, but they can also indicate that users are having trouble finding what they need quickly. What is a performance test document? How can you write one? Below is a simple example of what a performance test document might look like: Performance test document. Introduction: This provides a brief description of the application or system under test, the purpose of the performance test, and the expected outcomes. Test objectives: This section outlines the goals of the performance testing activity. These could include verifying the system's response times under varying loads, identifying bottlenecks, or validating scalability. Test scope: This section describes the features and functionalities to be tested and those that are out of scope for the current test effort.
Test environment details: This section provides a detailed description of the hardware, software, and network configurations used in the test environment. Performance test strategy: This section describes the approach for performance testing. It outlines the types of tests to be performed (load testing, stress testing, and others). Test data requirements: This section outlines the type and volume of data needed to conduct the tests effectively. Performance test scenarios: This section defines the specific scenarios to be tested. These scenarios are designed to simulate realistic user behavior and load conditions. KPIs to be measured: This section lists the key performance indicators to be evaluated during the test, such as response time, throughput, error rate, and others. Test schedule: This section provides a timeline for all testing activities. Resource allocation: This section details the team members involved in the test and their roles and responsibilities. Risks and mitigation: This section identifies potential risks that might impact the test and proposes mitigation strategies. Performance test results: This section presents the results of the performance tests. It should include detailed data, graphs, and an analysis of the results.
|
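Several of the KPIs described above (response time, throughput, error rate) can be derived from the raw request samples that a load tool records. The following is a minimal sketch under stated assumptions: the `Sample` record, its field names, and the nearest-rank 95th percentile are illustrative choices rather than any specific tool's format, and throughput is expressed here as completed requests per second.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """One request as a load tool might record it (hypothetical fields)."""
    start_s: float      # when the request was sent
    duration_s: float   # time until the full response arrived
    ok: bool            # False if the request ended in an error


def summarize(samples):
    """Compute a few common KPIs from a non-empty list of samples."""
    if not samples:
        raise ValueError("at least one sample is required")
    durations = sorted(s.duration_s for s in samples)
    # Nearest-rank percentile: the smallest value with at least 95% at or below.
    p95 = durations[math.ceil(0.95 * len(durations)) - 1]
    first_start = min(s.start_s for s in samples)
    last_end = max(s.start_s + s.duration_s for s in samples)
    window_s = last_end - first_start
    errors = sum(1 for s in samples if not s.ok)
    return {
        "avg_response_s": sum(durations) / len(durations),
        "p95_response_s": p95,
        "throughput_rps": len(samples) / window_s if window_s > 0 else float("nan"),
        "error_rate_pct": 100.0 * errors / len(samples),
    }


def meets_criteria(kpis, max_p95_s, max_error_pct):
    """Compare measured KPIs against success thresholds chosen by the team."""
    return (kpis["p95_response_s"] <= max_p95_s
            and kpis["error_rate_pct"] <= max_error_pct)
```

Reporting a percentile next to the average is a deliberate choice: an average can look healthy while a meaningful share of users sees slow responses.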

| Performance Testing Types, Steps, Best Practices, and Metrics | Configure the testing environment before you execute the performance tests. Assemble your testing tools in readiness. Consolidate and analyze test results. Share the findings with the project team. Fine-tune the application by resolving the performance shortcomings identified. Repeat the test to confirm each problem has been conclusively eliminated. Create a testing environment that mirrors the production ecosystem as closely as possible. Performance testing and performance engineering are two closely related yet distinct terms. Performance testing is a subset of performance engineering and is primarily concerned with gauging the current performance of an application under certain loads. To meet the demands of rapid application delivery, modern software teams need a more evolved approach that goes beyond traditional performance testing and includes end-to-end, integrated performance engineering. Performance engineering is the testing and tuning of software in order to attain a defined performance goal. It occurs much earlier in the software development process and seeks to proactively prevent performance problems from the outset. Testing tools vary in their capability, scope, sophistication, and automation.
Reasons for performance testing: Organizations run performance testing for at least one of the following reasons: to determine whether the application satisfies performance requirements (for instance, that the system should handle a required number of concurrent users), and to locate computing bottlenecks within an application. For example, once you have identified the saturation point, cross-reference that information with APM data to check whether anything is missing or contradictory. This will enrich your understanding of how users behave on your website. BlazeMeter can integrate with DX APM, New Relic, AppDynamics, Dynatrace, AWS IAM, and CloudWatch. Look for bottlenecks and other high-stress points where user traffic spiked. Chart trends where your system was close to its limit. For example, if you put a popular product on sale, you may expect a high enough volume on that one page to crash the entire system. The best practice is to divide your system into logical sections based on observed trends, then test them individually to help identify the weakest link. Where do your users come from? Load testers often test their infrastructure only from inside the organization, which is a mistake. Testing external infrastructure is crucial. If you fail to test it, your delivery chain cannot anticipate a peak user day. Another common mistake is to assume each user will fully complete their actions in a system. For example, test a user who adds something to their cart but never checks out. You need to know how your system will react. Ramp-up is the amount of time it takes to add all test users to a test execution. Configure it to be slow enough that you have time to determine at what stage problems begin. Monitor indicators at each stage.
Monitor your KPIs and how your system reacts. Memory usage should stay stable, CPU usage low, and recovery from spikes quick. If the test failed, identify bottlenecks and errors and fix what needs fixing before testing your system again. Check for memory leaks, high CPU usage, unusual server behavior, and any errors during these tests. First and foremost, make sure your newly configured test works. Ensure that none of your test resources are over- or under-utilized by performing a calibration procedure, which validates that the performance test resources themselves are not causing a bottleneck. Proper calibration ensures your testing resources are up to the task. Thorough test calibration is a topic in itself. We provide a detailed walkthrough for calibrating both JMeter tests and Taurus tests to ensure they run smoothly on BlazeMeter. Your first test runs will be all about finding and fixing bottlenecks. A bottleneck often means your application server experienced a failure while under load, which will require investigating the application server to find out what happened. Finding it during testing gives you the opportunity to fix issues well ahead of time. Depending on the scenario, you may, for example, need to implement database replication or a failover procedure to ensure your services stay up and running while issues are addressed behind the scenes. It is better to be safe than sorry. Each time you fix or improve something, re-run your tests as an extra measure of verification. Test, tweak, test, and test again. High-performance applications will continue to require faster and more flexible systems as technology advances. Leverage automation to update and run tests. Test early and often in your development life cycle. Prepare a backup plan with backup servers and locations at the ready.
Remember that a solid load testing strategy involves running both smaller tests within your development cycle and larger stress tests in preparation for heavy usage events. This blog post was originally published in and was updated for accuracy and relevance in November |
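The ramp-up guidance above (add users slowly enough to see at what stage problems begin, and monitor indicators at each stage) can be sketched as a stepped schedule plus a check over per-stage results. This is an illustrative sketch only: the function names, the tuple layouts, and the example limits are assumptions, and it does not reproduce how BlazeMeter, JMeter, or Taurus configure ramp-up.

```python
import math


def ramp_up_stages(total_users, ramp_up_s, steps):
    """Split a ramp-up into equal steps.

    Returns a list of (stage_start_s, active_users) pairs, ending at total_users.
    """
    if total_users < 1 or steps < 1 or ramp_up_s < 0:
        raise ValueError("total_users and steps must be >= 1, ramp_up_s >= 0")
    stages = []
    for i in range(1, steps + 1):
        start_s = ramp_up_s * (i - 1) / steps
        users = math.ceil(total_users * i / steps)
        stages.append((start_s, users))
    return stages


def first_failing_stage(stage_results, max_p95_s, max_error_pct):
    """Return the user count of the first stage that breaches a limit.

    stage_results is a list of (users, p95_response_s, error_pct) tuples in
    ramp order; None means every stage stayed within the limits.
    """
    for users, p95_s, error_pct in stage_results:
        if p95_s > max_p95_s or error_pct > max_error_pct:
            return users
    return None


# Example: 100 users added over 300 s in 4 steps, with made-up stage results.
schedule = ramp_up_stages(total_users=100, ramp_up_s=300, steps=4)
observed = [(25, 0.4, 0.0), (50, 0.6, 0.5), (75, 1.4, 2.0), (100, 3.0, 9.0)]
breaking_point = first_failing_stage(observed, max_p95_s=1.0, max_error_pct=1.0)
```

More steps give a finer estimate of where degradation starts, at the cost of a longer run; the user count returned here is a starting point for cross-referencing with APM data, not a precise saturation point.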

| What is Performance Testing? | Soak testing, also known as endurance testing, is done to determine whether the system can sustain the expected load. Performance testing tells us that our system can scale and meet the expected load. Error rate: It is the percentage of requests resulting in errors compared to the total number of requests. This refers to the time taken for one system node to respond to the request of another. Plan and design tests: Think about how widely usage is bound to vary, then create test scenarios that accommodate all feasible use cases. |

| Common application performance issues faced by enterprises |
Some performance testing may occur in the production environment but there must be rigorous safeguards that prevent the testing from disrupting production operations. Determine the constraints, goals, and thresholds that will demonstrate test success. The major criteria will be derived directly from the project specifications, but testers should be adequately empowered to set a wider set of tests and benchmarks. Think about how widely usage is bound to vary then create test scenarios that accommodate all feasible use cases. Design the tests accordingly and outline the metrics that should be captured. Configure the testing environment before you execute the performance tests. Assemble your testing tools in readiness. Consolidate and analyze test results. Share the findings with the project team. Fine tune the application by resolving the performance shortcomings identified. Repeat the test to confirm each problem has been conclusively eliminated. Create a testing environment that mirrors the production ecosystem as closely as possible. Performance testing and performance engineering are two closely related yet distinct terms. Performance Testing is a subset of Performance Engineering, and is primarily concerned with gauging the current performance of an application under certain loads. To meet the demands of rapid application delivery , modern software teams need a more evolved approach that goes beyond traditional performance testing and includes end-to-end, integrated performance engineering. Performance engineering is the testing and tuning of software in order to attain a defined performance goal. Performance engineering occurs much earlier in the software development process and seeks to proactively prevent performance problems from the get-go. Testing tools vary in their capability, scope, sophistication and automation. Find out how OpenText Testing Solutions can move the effectiveness of your performance testing to the next level. 
Discover, design, and simulate services and APIs to remove dependencies and bottlenecks. Easy-to-use performance testing solution for optimizing application performance. My Account. ai opentext. ai Overview IT Operations Aviator DevOps Aviator Experience Aviator Content Aviator Business Network Aviator Cybersecurity Aviator. Overview SAP Microsoft Salesforce. Smarter with OpenText Smarter with OpenText Overview Master modern work Supply chain digitization Smarter total experience Build a resilient and safer world Unleash developer creativity Climate Innovators. Information management at scale Information management at scale Overview. AI Cloud AI Cloud Overview. Content Cloud Content Cloud Overview Content Services Platforms Enterprise Applications Information Capture and Extraction eDiscovery and Investigations Legal Content and Knowledge Management Information Archiving Viewing and Transformation. Cybersecurity Cloud Cybersecurity Cloud Overview. Overview Learn APIs Resources. Experience Cloud Experience Cloud Overview Experiences Communications Personalization and Orchestration Rich Media Assets Data and Insights. IT Operations Cloud IT Operations Cloud Overview. Portfolio Portfolio Overview. Your journey to success Your journey to success Overview. Customer Support Customer Support Overview Premium Support Flexible Credits Knowledge Base Get Support Pay my bill. Customer Success Services Customer Success Services Overview. Learning Services Learning Services Overview Learning Paths User Adoption Subscriptions Certifications. Managed Services Managed Services Overview Private Cloud Off Cloud Assisted Business Network Integration. Find an OpenText Partner Find an OpenText Partner Overview All Partners Partner Directory Strategic Partners Solution Extension Partners. Find a Partner Solution Find a Partner Solution Overview Application Marketplace OEM Marketplace Solution Extension Catalog. 
Become a Partner Sign up today to join the OpenText Partner Program and take advantage of great opportunities. Learn more. Asset Library Asset Library CEO Thought Leadership Webinars Demos Hands-on labs. Blogs Blogs OpenText Blogs CEO Blog Technologies Line of Business Industries. Customer Success Customer Success Customer Stories. Overview Navigator Champions Navigator Academy. My Account Login Cloud logins Get support Developer View my training history Pay my bill. |
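
The step of determining constraints, goals, and thresholds can be sketched as a simple pass/fail check run after each test. The metric names and limits below are hypothetical; real values would come from the project specifications and any wider benchmarks the testers choose to set.

```python
# Hypothetical success criteria: each metric maps to its maximum acceptable value.
THRESHOLDS = {
    "avg_response_time_ms": 500.0,
    "error_rate_percent": 1.0,
    "cpu_utilization_percent": 80.0,
}


def evaluate(measured, thresholds):
    """Return the metrics that exceed their threshold or were not measured at all."""
    failures = {}
    for name, limit in thresholds.items():
        value = measured.get(name)
        if value is None or value > limit:
            failures[name] = value
    return failures


# Illustrative results from one test run, not real measurements.
measured = {
    "avg_response_time_ms": 420.0,
    "error_rate_percent": 2.5,
    "cpu_utilization_percent": 65.0,
}

failures = evaluate(measured, THRESHOLDS)
if failures:
    print("Test failed:", failures)
else:
    print("All success criteria met")
```

Running the same check again after tuning gives a direct way to confirm that a previously failing metric is now within its limit.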

Written by IR Team. Unified communications systems today are becoming more and more complex. The pace at which they are evolving is mind-boggling, and the level of complexity can vary greatly, depending on the organization and industry. But one thing is certain: you must regularly conduct performance testing on your technology tools or risk downtime and even complete system failure.
